5G is in the news as competing operators roll out deployments in a race to have the fastest service in the most places. At Ookla®, we’ve observed astonishing 5G speeds that live up to the hype. But 5G headlines can be confusing for consumers who are trying to sift through conflicting claims that are often based on limited (if not deeply flawed) data. 5G as a technology is also rapidly evolving, which makes sound data collection that addresses the nuances of these shifts even more important.

As the industry leader in internet testing, Ookla has over 15 years of experience measuring emerging technologies. We’re here to share some lessons learned about what goes into making an authoritative claim that consumers can rely on. We’ll also look closely at some recent examples from the United Kingdom where a multitude of methodological shortcomings from unseasoned data providers have led to unreliable network claims. While this article is focused on U.K. examples, the same methodology critiques apply to active 5G claims from the same data providers in other markets.

Poor data leads to spurious claims: examples from the U.K.

Operators are eager to use designations like “fastest,” “best coverage” and “most improved” because these messages resonate with customers. But without proper data and careful analysis backing them, these labels are meaningless and potentially misleading. Ookla has a team of data scientists dedicated to ensuring our claims about networks are accurate, representative and fair.

In recent months we’ve seen a series of conflicting claims being made in the U.K. market. These claims are not based on robust, proven data collection and processing practices. As a result, consumers are being misled about which operators are truly providing the best experience.

Poor 5G identification muddles what’s being measured

As 5G is an emerging technology, it needs to reach a certain level of both adoption and maturity before comparative performance claims can be made in new markets. The U.K. is one such market, a hotbed of 5G activity where every operator is racing to be crowned the best. Operators’ well-intended desire to show off new capabilities has led to dubious claims. Competing operators are making similar claims about their network capabilities, while various data providers are publishing reports based on very limited and flawed data. This can lead consumers to make decisions based on questionable reporting, or to purchase services that aren’t even available in their area.

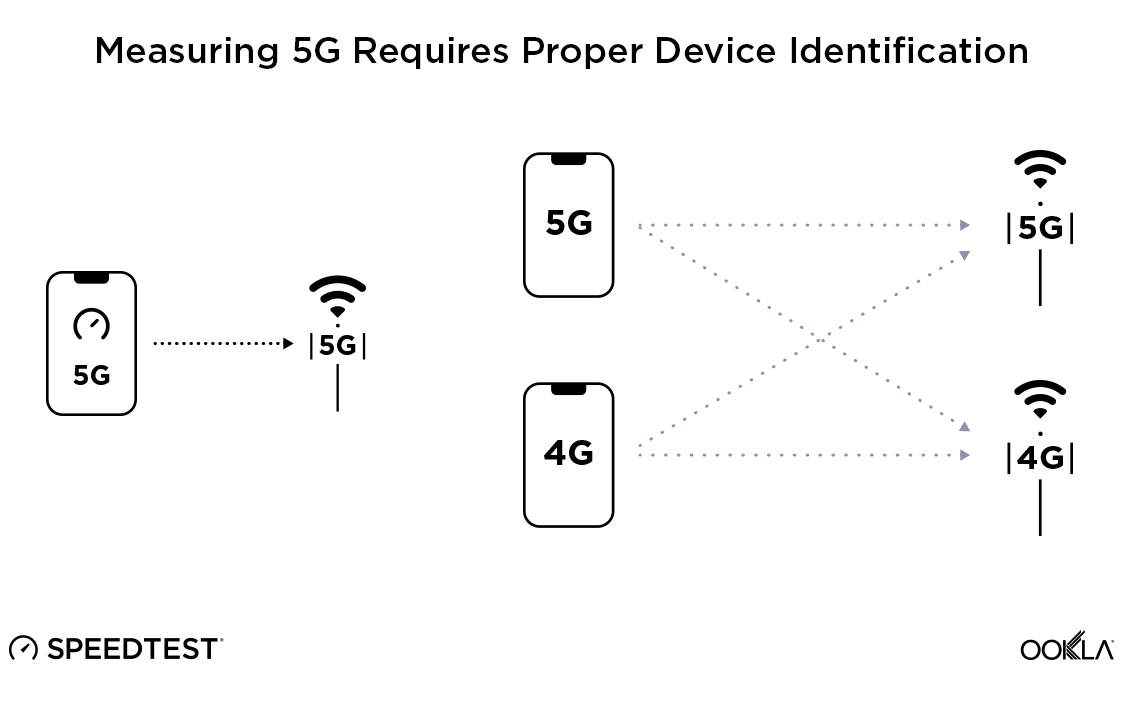

Not all 5G-capable devices natively identify 5G when reporting the connection type to applications. That’s why Ookla has partnered directly with device manufacturers worldwide to implement accurate in-app 5G detection in Speedtest®. Data providers who do not couple robust in-app detection with strict filtering criteria are highly likely to misidentify a wide range of 5G connections and ultimately report speeds that do not accurately reflect the network’s actual 5G capability.

Failure to measure full throughput undervalues high-speed connections

Modern technologies like 5G are capable of achieving gigabit speeds and beyond. Unless a testing solution can measure the full throughput of a user’s connection, measured speeds will not reflect the network’s real-world performance. The Ookla Speedtest Methodology uses a distributed network of servers to fully saturate a connection in the foreground, on demand, and can accurately measure speeds of up to 10 Gbps. Other data providers like Opensignal, nPerf and umlaut either test to remote CDNs, test in the background or, worse still, hide their tests in third-party apps that are completely unrelated to network diagnostics.

The Opensignal methodology allows for a blend of testing methods including background tests, download size-capped tests and foreground customer-initiated tests with low usage rates. However, their public reporting consistently makes no distinction between results derived from these differing methodologies and presents them as a simple national average. This mix of methodologies introduces the possibility that any reported differences in download speed among operators may be due to differences in testing and not due to differences in the actual services that they provide.
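To see how pooling test methods can manufacture a gap between operators, consider a minimal sketch with made-up numbers. It assumes two hypothetical operators whose networks perform identically under each test method and differ only in how their tests happen to split between foreground and background runs. The method names, speeds and test counts are illustrative assumptions, not Opensignal data, and `blended_mean` is a hypothetical helper.

```python
# Hypothetical per-method mean download speeds (Mbps). Both operators are
# assumed to deliver exactly these speeds; only their test mix differs.
PER_METHOD_MEAN_MBPS = {
    "foreground": 180.0,
    "background": 60.0,
}


def blended_mean(per_method_mean: dict[str, float], test_counts: dict[str, int]) -> float:
    """Return the single pooled average obtained by mixing all test methods.

    Each method contributes in proportion to how many tests used it, so the
    pooled figure depends on the method mix as well as on the network itself.
    """
    total_tests = sum(test_counts.values())
    weighted_sum = sum(per_method_mean[method] * n for method, n in test_counts.items())
    return weighted_sum / total_tests


# Identical network performance, different mix of test methods.
operator_a = blended_mean(PER_METHOD_MEAN_MBPS, {"foreground": 800, "background": 200})
operator_b = blended_mean(PER_METHOD_MEAN_MBPS, {"foreground": 200, "background": 800})
print(f"Operator A: {operator_a:.1f} Mbps")  # Operator A: 156.0 Mbps
print(f"Operator B: {operator_b:.1f} Mbps")  # Operator B: 84.0 Mbps
```

Under these assumptions the pooled averages differ by 72 Mbps even though the two networks are, by construction, equally fast. Reporting results separately per test method would remove this artifact.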

Opensignal’s test methodology also tends to seriously undervalue the full capability of networks. Because a significant amount of demand must be generated on the network before 5G carrier aggregation can even take effect, shorter-duration and background tests are incapable of ensuring that the network assigns a sufficient number of component carriers to the user equipment (UE) to trigger a 5G connection. Data providers that only pass along a small file do not fully saturate a network connection and often cannot place enough demand on the network to trigger a 5G connection, leaving devices — and speeds — stuck registering 4G.

For example, Opensignal recently reported that the average 5G download speed in the U.K. was 130.1 Mbps. Ookla’s data for the same time period showed a dramatically higher mean download speed of 187.49 Mbps (95% CI [185.57, 189.42]). This 95% confidence interval, 185.57–189.42 Mbps, is the range of values that is likely to contain the true mean download speed. Ookla’s large sample sizes combined with rigorous statistical testing ensure that all claims we make stand on solid ground. To their credit, Opensignal also includes uncertainty in their reported speeds, but they do not make clear what their confidence intervals represent, and without that important detail we cannot compare the two directly.
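For readers who want to see what a 95% confidence interval on a mean involves, here is a minimal sketch. The article does not state exactly how Ookla computed its interval; this assumes the common normal approximation (mean ± 1.96 standard errors), which is reasonable for large samples of independent tests. `mean_with_95ci` is a hypothetical helper, and the speeds are synthetic.

```python
import math
import random
import statistics


def mean_with_95ci(speeds_mbps: list[float]) -> tuple[float, float, float]:
    """Return (mean, lower, upper) for a normal-approximation 95% interval.

    Assumes independent samples and a sample large enough for the central
    limit theorem to apply; 1.96 is the two-sided 95% z critical value.
    """
    n = len(speeds_mbps)
    if n < 2:
        raise ValueError("need at least two samples to estimate spread")
    mean = statistics.fmean(speeds_mbps)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    std_err = statistics.stdev(speeds_mbps) / math.sqrt(n)
    half_width = 1.96 * std_err
    return mean, mean - half_width, mean + half_width


# Synthetic, illustrative speeds only (not Ookla or Opensignal data).
random.seed(0)
sample = [random.lognormvariate(5.0, 0.6) for _ in range(10_000)]
mean, lower, upper = mean_with_95ci(sample)
print(f"mean {mean:.2f} Mbps (95% CI [{lower:.2f}, {upper:.2f}])")
```

Because the half-width shrinks with the square root of the sample size, a provider that publishes an interval should also say which population and sampling unit (tests, devices or users) it refers to; otherwise intervals from different providers cannot be compared.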

If networks wish to demonstrate that they truly are the fastest, they must employ methodologies that test using the full capacity of the connection, rather than a small file passed to a distant server. This is especially true when considering the high speeds achieved with 5G. Put another way: Verifying the performance of a 5G network with Opensignal’s methodology is akin to measuring the volume of water in the Thames with a bucket.

Inadequate sample sizes falsely represent reality

Data providers with small test volumes from a limited number of locations do not have the statistical power needed to draw sound conclusions about network performance.

Speedtest is the industry’s most trusted source for consumer throughput testing: Speedtest users performed over 19.4 million tests daily during Q3 2020. We see real-time results on almost every mobile and fixed broadband network in the world, including just about every device make and model, which provides an unmatched view of how the internet is currently performing at a global scale.

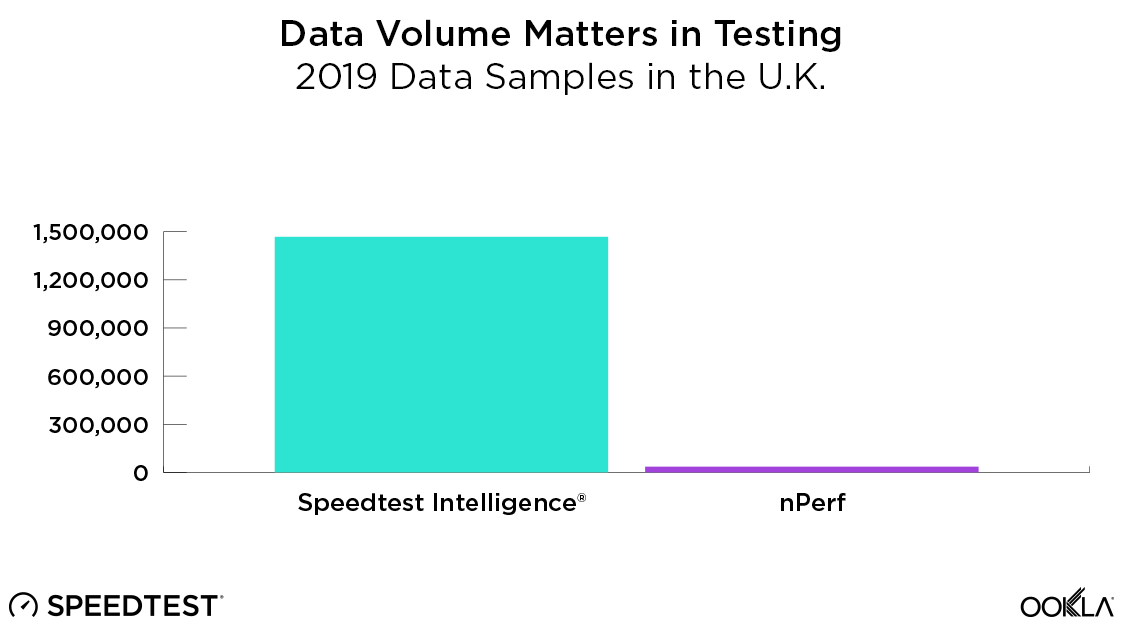

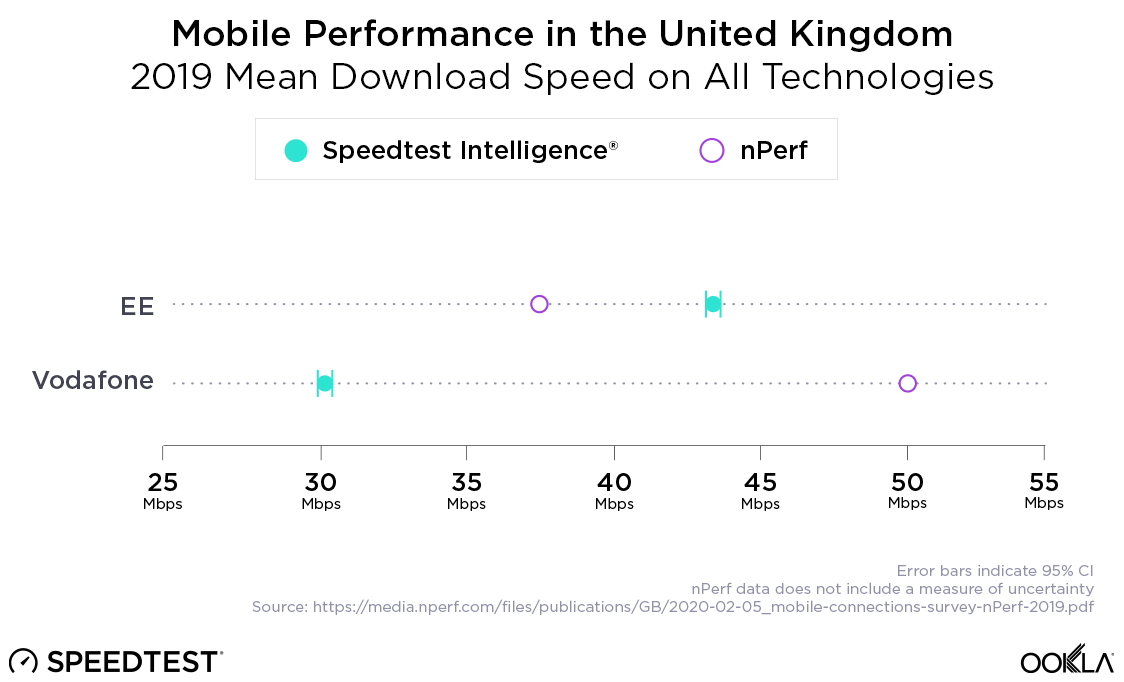

For example, nPerf published a U.K. report based on fewer than 36,000 test samples taken over a 12-month period. For comparison, Ookla collects over 15,000 tests every day in the U.K., so it took Ookla less than three days to collect the same amount of data that nPerf collected in a year. Generally speaking, lower sample counts tend to produce results with much less statistical certainty. Unsurprisingly, given its limited sample size, this nPerf report showed wildly different results from all others released in the market. This put Vodafone on the defensive as rivals ran TV ads to debunk nPerf’s claim, which was meant to bolster Vodafone’s network offerings.
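The arithmetic behind the comparison above can be checked in a few lines. The sketch below uses only the two figures quoted in the paragraph, and it assumes (as a simplification) independent samples with similar spread, so that the width of a confidence interval scales as 1/sqrt(n). `relative_ci_width` is a hypothetical helper.

```python
import math

NPERF_SAMPLES = 36_000         # upper bound quoted for nPerf's 12-month U.K. report
OOKLA_DAILY_UK_TESTS = 15_000  # lower bound quoted for Ookla's daily U.K. tests

days_to_match = NPERF_SAMPLES / OOKLA_DAILY_UK_TESTS
print(f"Days for Ookla to match nPerf's annual volume: {days_to_match:.1f}")  # 2.4


def relative_ci_width(n_small: int, n_large: int) -> float:
    """How many times wider a confidence interval is with n_small samples
    than with n_large, assuming equal spread (standard error ~ 1/sqrt(n))."""
    return math.sqrt(n_large / n_small)


yearly_ookla = OOKLA_DAILY_UK_TESTS * 365
ratio = relative_ci_width(NPERF_SAMPLES, yearly_ookla)
print(f"Interval width ratio over a year: {ratio:.1f}x")  # 12.3x
```

Since the quoted figures are bounds ("fewer than" and "over"), the true ratio under these assumptions would be at least roughly 12x.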

Drive testing misses areas where users are most likely to connect

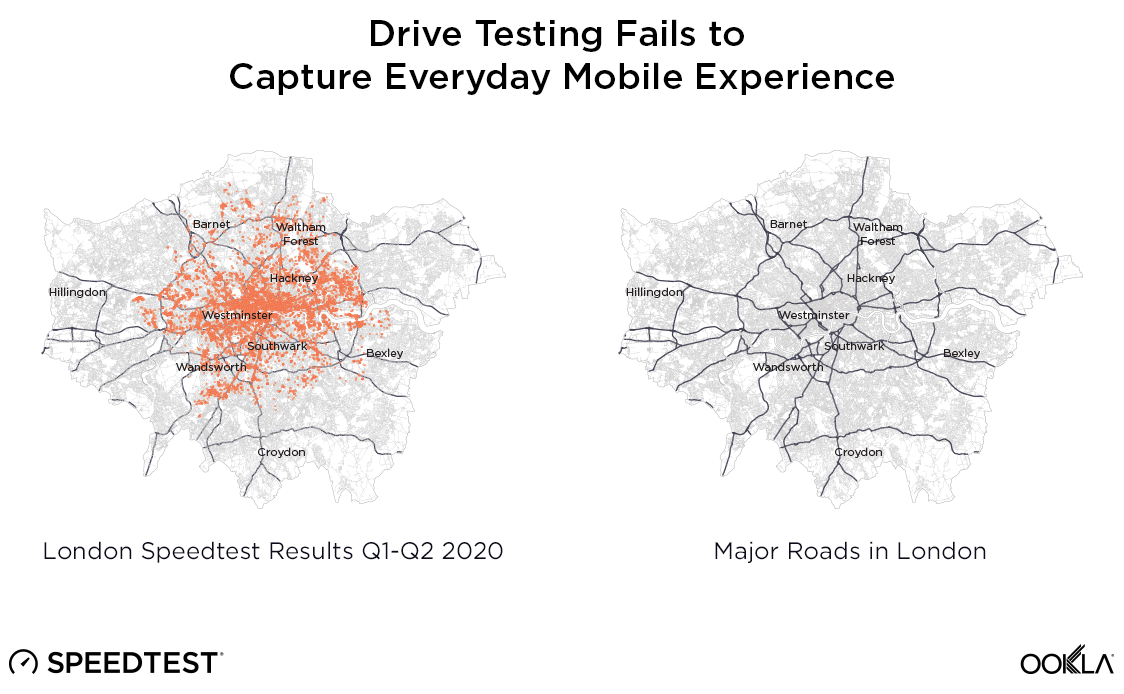

Driving pre-configured test equipment on pre-configured routes in static, lab-like conditions has been a staple of network reporting for a long time. While drive testing removes several potentially confounding variables, this data is not representative of real users’ experiences. Using drive testing as a proxy measurement of user experience has always proved troublesome, given the optimized nature of the pre-selected routes and equipment involved. When you consider that 5G is an emerging technology, with limited network coverage and limited device penetration, using drive testing to back claims about how a customer may experience a 5G network is disingenuous at best.

5G speeds are only important to measure where customers actually have access to that service. Both umlaut and Rootmetrics have reported on 5G performance in London based on drive testing results. Only the Rootmetrics report included an availability metric, which showed that while Vodafone was slightly faster in London, its 5G signal was available on only 5% of the route driven, compared to 28% for EE. Narrow measurements taken from a small number of devices, in cars, in a test environment, on a small number of routes over a short time period do not provide a sufficient sample to underpin a claim of “fastest 5G” in one of the world’s foremost cities.
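A 5G speed figure means little without the availability figure beside it. The minimal sketch below summarizes a drive route both ways. The `RouteSample` record, the route sizes and the speeds are hypothetical, and this is not Rootmetrics’ actual method; it only shows that a network can post the higher 5G speed while offering 5G on a much smaller share of the route.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RouteSample:
    """One hypothetical drive-test measurement point along a route."""
    on_5g: bool           # whether the test device was on 5G at this point
    download_mbps: float  # measured download speed at this point


def summarize_route(samples: list[RouteSample]) -> tuple[float, Optional[float]]:
    """Return (share of route points on 5G, mean 5G speed or None if never on 5G)."""
    if not samples:
        raise ValueError("route has no samples")
    five_g_speeds = [s.download_mbps for s in samples if s.on_5g]
    availability = len(five_g_speeds) / len(samples)
    mean_5g = sum(five_g_speeds) / len(five_g_speeds) if five_g_speeds else None
    return availability, mean_5g


def make_route(n_points: int, n_5g: int, speed_5g: float, speed_other: float) -> list[RouteSample]:
    """Build a hypothetical route with n_5g points on 5G and the rest not on 5G."""
    return ([RouteSample(True, speed_5g) for _ in range(n_5g)]
            + [RouteSample(False, speed_other) for _ in range(n_points - n_5g)])


operator_x = make_route(20, 1, 300.0, 40.0)
operator_y = make_route(20, 5, 250.0, 40.0)
for name, route in [("Operator X", operator_x), ("Operator Y", operator_y)]:
    availability, mean_5g = summarize_route(route)
    print(f"{name}: 5G on {availability:.0%} of route, mean 5G speed {mean_5g:.0f} Mbps")
```

With these made-up inputs, Operator X reports the faster 5G (300 vs. 250 Mbps) but offers it on 5% of the route versus 25% for Operator Y.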

Each time a user takes a Speedtest, a snapshot of the internet is captured for that specific time, place, device and network. Because these tests are initiated by consumers when and where they need performance data, Speedtest gives users accurate information about internet speeds at the times and locations that are important to them. When aggregated, these measurements describe the network’s real-world, round-the-clock performance and its ability to meet the needs of its users. Our volume of data allows us to accurately describe and compare performance, including how much time consumers spend connected to 5G. For example, we can look at results across all devices to quantify the performance that the average user is experiencing or narrow our focus to 5G-capable devices, which allows us to fully assess the high-end capabilities offered in modern deployments.
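As an illustration of how a “time spent on 5G” style metric can be aggregated from many observations, here is a minimal sketch. Ookla’s exact definition of Time Spent on 5G is not given in this article, so the following is an assumption: restrict to 5G-capable devices, compute each device’s share of observations made on 5G, then average across devices. The `ConnectionSample` record and the `time_on_5g` helper are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ConnectionSample:
    """A hypothetical record of one observation of a device's connection."""
    device_id: str
    five_g_capable: bool
    on_5g: bool


def time_on_5g(samples: list[ConnectionSample]) -> float:
    """Average, across 5G-capable devices, of each device's share of samples on 5G.

    Weighting every device equally keeps a few very active devices from
    dominating the figure; devices that are not 5G-capable are excluded.
    """
    per_device: dict[str, list[bool]] = defaultdict(list)
    for s in samples:
        if s.five_g_capable:
            per_device[s.device_id].append(s.on_5g)
    if not per_device:
        raise ValueError("no samples from 5G-capable devices")
    shares = [sum(flags) / len(flags) for flags in per_device.values()]
    return sum(shares) / len(shares)


samples = (
    [ConnectionSample("a", True, True)]
    + [ConnectionSample("a", True, False) for _ in range(3)]
    + [ConnectionSample("b", True, False) for _ in range(2)]
    + [ConnectionSample("c", False, False)]
)
print(f"{time_on_5g(samples):.1%}")  # 12.5%
```

Pooling all samples instead of averaging per device is an equally plausible design; the two can diverge when some devices contribute far more observations than others.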

Poor data science causes untrustworthy results

Other telecommunications data providers each have their own indices that they use when awarding “winners.” Unfortunately, most of these are based upon unclear and, at times, unsound ranking systems. From nPoints to Rootscores, compound scores hide an operator’s actual performance from consumers who simply want to know what performance they can expect. Arbitrary data periods seem altered to suit the highest bidder among network operators, device definitions are unclear, and data samples are minuscule. This produces the desirable commercial outcome for the data providers, but it does not give consumers transparent information about which operator will actually serve them best.
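One reason compound scores can obscure performance is that the ranking depends on the weights chosen. The formulas behind nPoints and Rootscores are not described here, so this sketch swaps in a generic weighted average over hypothetical sub-scores for two hypothetical operators. Changing only the weights flips the “winner” while the underlying measurements stay the same.

```python
# Hypothetical normalized sub-scores (0-100); not real operator data.
SUBSCORES = {
    "Operator A": {"speed": 90, "coverage": 60, "reliability": 70},
    "Operator B": {"speed": 70, "coverage": 85, "reliability": 75},
}


def composite(scores: dict[str, int], weights: dict[str, int]) -> float:
    """Generic weighted average of sub-scores (an assumed scoring scheme)."""
    total_weight = sum(weights.values())
    return sum(scores[metric] * w for metric, w in weights.items()) / total_weight


def ranking(weights: dict[str, int]) -> list[str]:
    """Operators sorted from highest to lowest composite score."""
    return sorted(SUBSCORES, key=lambda op: composite(SUBSCORES[op], weights), reverse=True)


speed_heavy = {"speed": 3, "coverage": 1, "reliability": 1}
coverage_heavy = {"speed": 1, "coverage": 3, "reliability": 1}
print("speed-weighted:", ranking(speed_heavy))        # ['Operator A', 'Operator B']
print("coverage-weighted:", ranking(coverage_heavy))  # ['Operator B', 'Operator A']
```

Publishing the underlying metrics (for example, median download speed and availability) alongside any composite lets consumers apply the weighting that matters to them.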

Basing claims on flawed data confuses consumers. Worse still, conflicting or misleading claims can substantially degrade a customer’s perception of, and trust in, their network operator. Basing regulatory policy on flawed data can have devastating consequences, such as poorly allocated government funding and a deepening digital divide for underserved populations, particularly in a world where we all rely on connectivity more than ever before.

Good data makes for justifiable claims

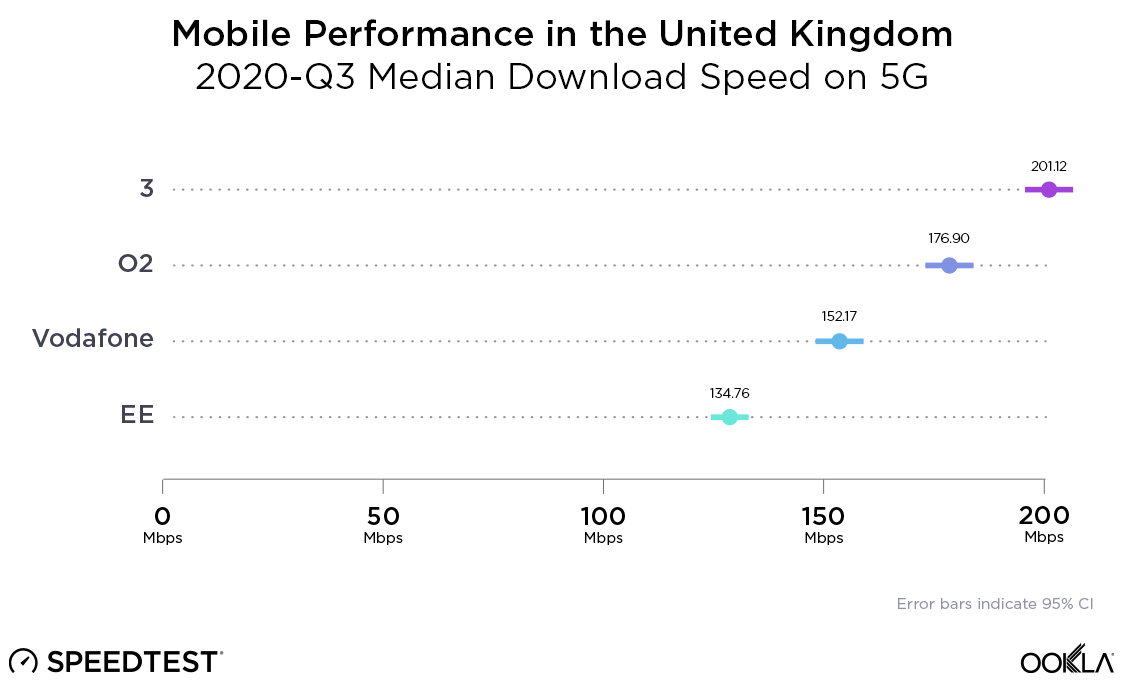

As we found in our recent U.K. market analysis, the 3 network has by far the fastest median download speed over 5G in the U.K. This analysis is based on over 60,000 Speedtest results taken over 5G by more than 16,000 devices in the U.K. during Q3 2020. In all, there were over 500,000 Speedtest results in the U.K. during Q3 2020. EE shows the highest Time Spent on 5G by 5G-capable devices at 10.9%, with 3 a distant second and Vodafone third.

Squandering marketing budgets to promote claims based on unsound data or unsound methodologies helps no one: not the customer, the operator or even the company providing the unsound claim. When Ookla stands behind a claim of “fastest” or “best” network in a country, we do so only when a strict set of conditions has been met. Being a trusted source of consumer information was our first function, and it remains the driver behind our mission.

To learn more about the data behind this article and what Ookla has to offer, visit https://www.ookla.com/.

